GPU Matrix Transpose

19. November 2024

In one of my previous blog posts I analyzed the performance of different matrix transpose algorithms on the CPU. In this blog post, we are going to implement and benchmark two matrix transpose algorithms on the Graphics Processing Unit (GPU) using CUDA. The goal is to optimize the algorithms with respect to speed and throughput. First I describe the problem, then we look at the algorithm implementation and finally I present my benchmarking results. The complete project is available on GitHub. Feel free to check it out and contact me if you have any questions or comments.

CUDA Fundamentals

GPU's are great for parallelizing code and thus booting the performance. When performing matrix transposition, the different swaps of matrix elements are independent of each other. Therefore, matrix transpose algorithms could benefit from parallelization. Before I present the algorithms, let me first explain some CUDA fundamentals.

In a typical CUDA program, you have some data where you want to perform parallelized computations. The first thing you need to do is to move this data from the host memory (usually your PC's memory) to the GPU memory. The CUDA API provides this functionality

Nvidia, 2024 (Accessed 19.12.2024)

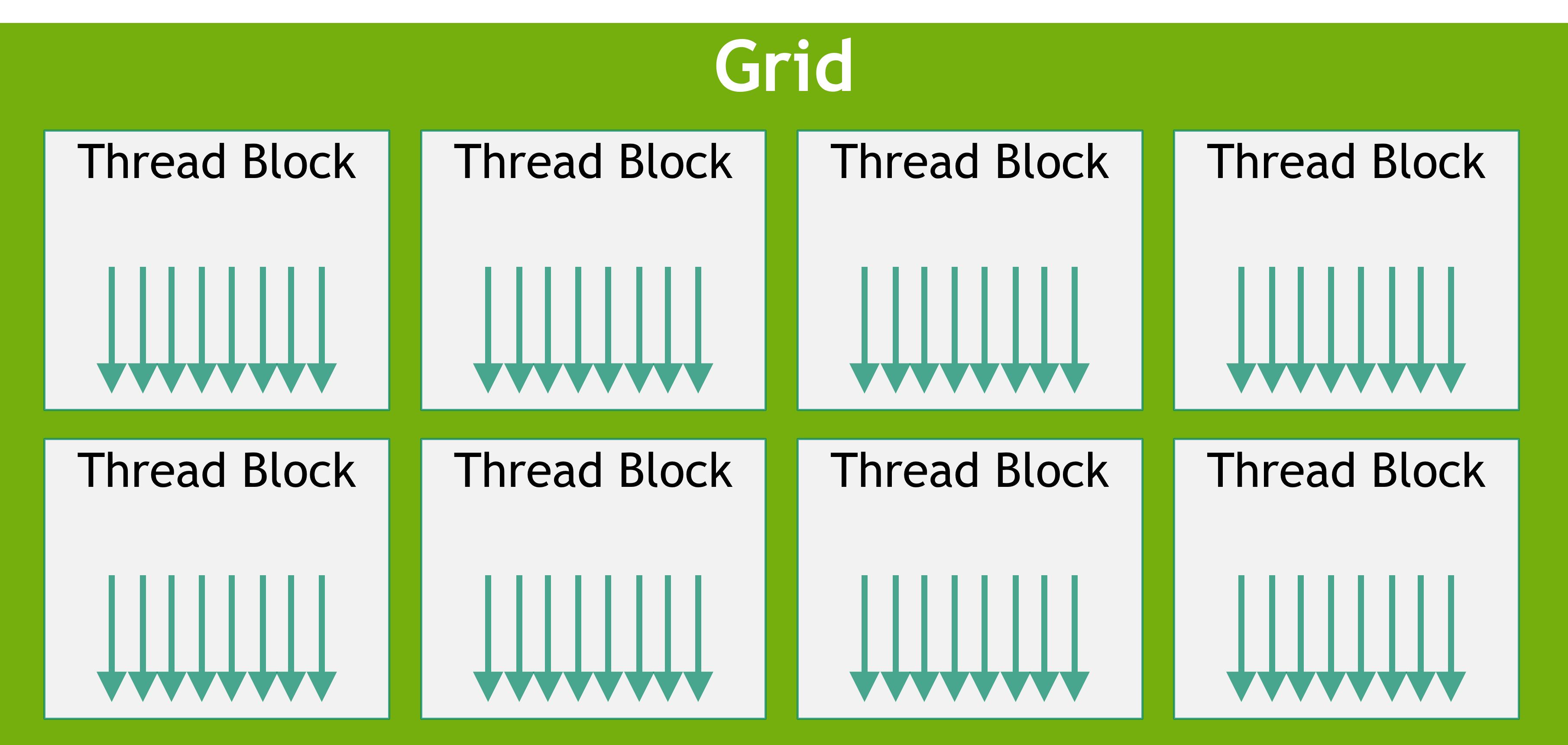

The last important concept to understand the blog post is Nvidia's GPU memory hierarchy. Before, we mentioned that a kernel launch creates multiple threads. Actually, CUDA provides a way to group threads into thread blocks. When launching a kernel, we need to specify the size of a thread block and the total number of thread blocks. All thread blocks are collected in a grid.

Threads from the same thread block have access to a shared memory which is significantly faster to access then the GPU's global memory. Therefore, it is essential for good performance to group threads that access the same memory regions into the same thread block. Obviously, I cannot cover all compute capabilities of the CUDA API, but with this knowledge you should be able to understand the following blog post. If you are intrested a better introduction, take a look at Nvidia C++ Programming Guide

Nvidia, 2024 (Accessed 19.12.2024)

Algorithms

The first kernel is a straightforward parallelization of the naive CPU algorithm from the previous blog posts. Instead of performing the swap of matrix elements in nested loops, each thread swaps one matrix element. Because each swap can be performed independently, no synchronization between threads in needed.

/*

params:

size: Size of matrix

mat: Matrix to transpose

*/

__global__

void transpose(int size, int* mat){

int x = blockIdx.x * blockDim.x + threadIdx.x;

int y = blockIdx.y * blockDim.y + threadIdx.y;

if (x < size && y < size && x < y) {

int tmp = mat[y * size + x];

mat[y * size + x] = mat[x * size + y];

mat[x * size + y] = tmp;

}

}The first algorithm computes the coordinates of the thread in the grid, i.e., the indices that a thread should swap. Note that the implementation uses two-dimensional block and grid sizes, as they can naturally map to the two-dimensional structure of matrices. The conditions and ensure that no thread swaps indices that are outside the matrix. The condition ensures that no duplicate swaps occur, which would result in the original matrix instead of the transposed matrix. If all conditions are satisfied, a standard in-place transposition is performed by the kernel function. Block and grid dimensions are computed according to the matrix size to ensure that all matrix elements get swapped, i.e., enough threads are launched. The drawback of the Algorithm first algorithm is that every memory access in the kernel function is to the global GPU memory. An optimized kernel function could use the shared memory of the GPU, since it has higher bandwidth and lower latency

Nvidia, 2024 (Accessed 05.06.2024)

/*

params:

size: Size of matrix

mat: Matrix to transpose

*/

__global__ void transpose(int size, int* matrix) {

__shared__ int tile[TILE_DIM][TILE_DIM+1];

int x = blockIdx.x * TILE_DIM + threadIdx.x;

int y = blockIdx.y * TILE_DIM + threadIdx.y;

for (int i = 0; i < TILE_DIM; i += blockDim.y) {

if (x < size && y + i < size) {

tile[threadIdx.y + i][threadIdx.x] = matrix[(y + i) * size + x];

}

}

__syncthreads();

for (int i = 0; i < TILE_DIM; i += blockDim.y) {

if (x < size && y + i < size) {

matrix[(y + i) * size + x] = tile[threadIdx.x][threadIdx.y + i];

}

}

}In the second algorithm, each block transposes a tile of the matrix. The kernel function uses a two-dimensional array in the GPUs shared memory. In the first loop, data from the matrix tile is loaded from the global memory into the shared memory. After synchronization, the second loop writes the transposed data back from shared memory to global memory. Synchronizing all threads of the block is needed because the threads inside a block cannot operate independently. Calling __syncthreads() ensures that the whole array in shared memory has been populated with the corresponding matrix element. Only after synchronization, the threads can continue to write the data to global memory. Otherwise, threads might write data into global memory that has not been loaded yet. Note that the size of the shared memory tile is TILE_DIM × TILE_DIM+1. This size is selected in order to avoid memory bank conflicts. Shared memory bank conflicts occur if two threads want to access the same shared memory bank at the same time. This results in serialized access, where the requests are processed one after another instead of in parallel, reducing performance. By offsetting each row with padding, the memory accesses are spread across different banks, reducing the chances of bank conflicts during shared memory access. This should result in better performance.

Experiments

For benchmarking the GPU implementations, the following setup was used. Both GPU algorithms were compiled with nvcc of the CUDA 12.5 module provided on the Marzola cluster of the University of Trento. To measure the speedup compared to the CPU implementations, the oblivious algorithm was compiled using -O3 optimizations. Each resulting binary was evaluated 50 times for matrix sizes between and . CUDA algorithms were also benchmarked with block sizes of 4, 8, 16 and 32. The grid size was computed using the matrix and block size. Time was only measured for the kernel execution, so the time for moving data from and to the GPU device is not contained in the measurements. The experiments were only conducted on the Marzola cluster of the University of Trento, since I had not access to other GPUs. On the cluster an Nvidia A30 GPU, with a theoretical peak bandwidth of 933GB/s was used to benchmark the implementations.

Let's look at the results obtained during the experiments. Note that the label 'GPU Tiled 16' stands for the second GPU algorithm with a block size of 16. 'GPU Navie' denotes the first GPU algorithm.

The most obvious observation is that GPU algorithms perform significantly better than the CPU algorithms. In every scenario, the GPU algorithms have a higher effective bandwidth, compared to the CPU algorithms. In the best case, the effective bandwidth of the GPU algorithm is 94x higher compared to the CPU algorithm. The second observation is that while the effective bandwidth decreases as matrix size increases for CPU algorithms, the effective bandwidth for GPU algorithms increases for bigger matrices. The third observation is that the difference in performance between the first and second GPU algorithm is quite small for smaller matrices, but grows as the matrix size increases. That means that for larger matrices, the usage of shared memory has a bigger effect on performance. The fourth observation is that the block size does affect the performance. In the experiments the optimal performance was achieved by using a block size of 16. Increasing the block size to 32 caused a drop in effective bandwidth for all GPU algorithms and matrix sizes.

Conclusion

The main observation made during this project was the significant increase in performance by using GPU acceleration. Using GPUs for parallelization can result in large improvements with respect to effective bandwidth and execution time. We also saw that a careful design of the GPU algorithm is needed. Different implementations and parameter choices can result in significant performance differences, even if the algorithm is executed on a GPU. The presented CUDA kernels could be further improved by using more advanced features of the CUDA platform, such as multiple streaming processors. Another approach could be to finetune the parameter choices. In my experiments, a block size of 16 was optimal, but further parameter choices could be investigated. A multi-GPU implementation could also imporve performance.

References

[1] Nvidia. "CUDA C++ Best Practices Guide", 2024 (Accessed 05.06.2024)

[2] Nvidia. "CUDA Toolkit Documentation", 2024 (Accessed 19.12.2024)

[3] Nvidia. "CUDA C++ Programming Guide", 2024 (Accessed 19.12.2024)